Patch Production Faster with Security-oriented Agile Development Practices

Overview

All computer security depends on software application security. Some believe that Agile-inspired development methodologies should not be used to implement a Secure Software Development Lifecycle (SSDLC). There are many reasons for this, including experience with poor Agile implementations in which teams shipped very insecure code or, lacking security knowledge, wrote only happy-path user stories and tests, as well as a preference for a top-down approach to security requirements.

In fact, software development teams practicing Agile methodologies are not only just as capable of practicing good software security but, with certain practices such as test-driven development, are more capable of responding to the current threat landscape. These practices, in turn, drastically reduce the number of days required to patch a vulnerability in production web applications. Agile teams are able to develop, test, deploy, and maintain software systems that require extra security.

One of the most pressing challenges facing organizations today is the number of days it takes to update production software after a vulnerability is discovered, a window during which successful cyberattacks can hit the organization before a fix is in place. In this paper, I will show how following key Agile development practices, along with implementing continuous integration and deployment, achieves significant reductions in the time to patch.

Must Reduce Days to Patch a Vulnerability

According to research by TCell (Security Report for In-Production Web Applications. Retrieved 2/25/2019), in 2018 the average time between the discovery of a security vulnerability in a production web application and the deployment of its patch to production was more than a month.

| Severity Level | Average Days to Patch |
|---|---|
| All (Regardless of Severity) | 38 days |
| High | 34 days |
| Medium | 39 days |
| Low | 54 days |
| Oldest unpatched CVE | 340 days |

The perils of slow patching cannot be overstated. The huge Equifax data breach in 2017 was entirely preventable. The breach was a direct result of the failure to apply an available patch for a known vulnerability in the Apache Struts application framework (Newman, Lily Hay. Equifax Officially Has No Excuse. Retrieved 2/25/2019.). When a Common Vulnerabilities and Exposures (CVE) notice is released for a major framework like Struts or Ruby on Rails, publicly accessible web applications built on those technologies enter a race with malicious adversaries who scour the web with automated crawlers looking for vulnerable sites. It is highly likely that a website with an unpatched vulnerability will be compromised before the patch is applied. Even if the site survives the initial onslaught out of sheer luck, these known vulnerabilities are some of the first that a targeted attack will exploit. The only defense is to patch.

One of the reasons it takes so long to patch in these environments is not that the vulnerabilities require substantially tricky code changes, but rather that it takes a long time to test the software for regressions and then deploy it to production. These are precisely the problems that key practices from the Agile community can solve.

Key Practices

Security Awareness for Developers

One of the first steps in implementing an Agile SSDLC is to make sure that all developers and product owners have a basic understanding of the primary ways that web applications get hacked. There is a finite list of issues that, if taken care of, will drastically reduce the attack surface of the application. The TCell report concluded that the five most common incidents in their survey sample were:

- Cross-Site Scripting (XSS)

- SQL Injection (SQLi)

- Automated Threats

- File Path Traversal

- Command Injection (CMDi)

All of these threats are among the OWASP Top 10, a publication that details the most common vulnerabilities that lead to compromised web applications and is regularly updated by volunteers from the application security community. It was first published in 2003, just two years after the Agile Manifesto, and yet the issues outlined have remained remarkably consistent. Developers are making the exact same security mistakes over and over again. We’re not getting better with the passing of time. Additionally, the OWASP Application Security Verification Standard (ASVS) is an important tool for performing a risk-limiting audit of applications based on the appropriate security level.

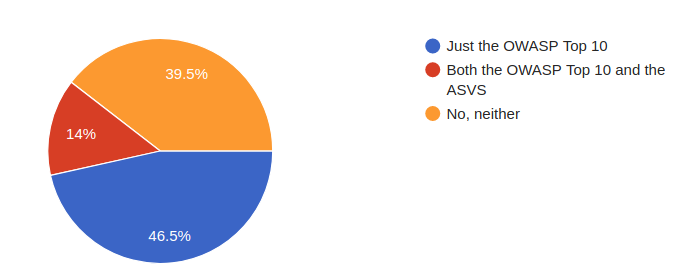

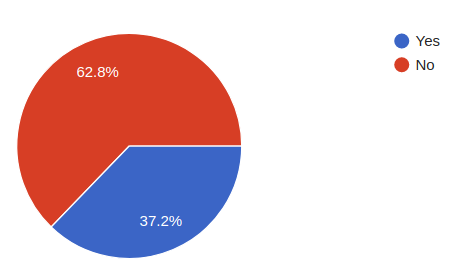

As an industry, we have a long way to go. In 2017, I conducted a small informal survey of paid professional software developers in the Atlanta area via the Slack chat community. These are professionals who are being paid to create websites, mobile iOS and Android applications, and the associated API servers. Of the forty-three respondents, 90% indicated that their organizations were concerned with cybersecurity, but only 60% had even heard of the OWASP material, and most did not run basic vulnerability scanners on their applications.

Have you heard of the OWASP Top 10 or the Application Security Verification Standard (ASVS)?

Do you or someone on your team run automatic security scanners that scan the source code for common security vulnerabilities?

Basic knowledge of the OWASP Top 10 and automated vulnerability scanning are the minimum acceptable effort for dealing with application security today.

Writing Concise User and Abuser Stories

One of the biggest challenges when including security in an Agile development process is that security is often seen as a non-functional requirement. That usually would not be a problem, except that software requirements in user story form are extremely short, positive statements of customer value, of the form:

"As an inside sales agent, I want to be reminded periodically about clients I have not followed up with recently, so that I can keep my leads from going cold."Rietta, Frank. How to Use Story Points to Estimate a Web Application Minimum Viable Product. 2015.

The classic idea is to capture a written description for planning and as a reminder. Later when a developer starts to work on the story, they will have conversations with the product owner to flesh out the details of the story and its implementation.

A mistake I’ve seen many teams make is to express all user stories as feature-focused, happy-path user stories. That is all the product owner cares about, and that’s all the developers focus on. This stumbling block can be easily overcome by documenting non-functional requirements as system constraints (Cohn, Mike. User Stories Applied for Agile Software Development. Pearson Education, 2004. pp. 177-178.). The two best ways to do this are to write the constraint (or extension, in use case terminology (Cockburn, Alistair. Writing Effective Use Cases. Addison-Wesley, 2001. p. 100.)) on the back of the story card and to make it part of that card’s user acceptance test. For example:

"As a user, I want to search my address book by name."Constraints / Security

- Utilize prepared statement to avoid SQL injection. Concatenating SQL strings will be rejected in code review.

- All user supplied input must be passed through sanitize() to render in the HTML view
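The first constraint can be illustrated with a minimal sketch. The helper name `address_book_search` and the `contacts` table with its columns are hypothetical, chosen only for this example. The point is that the SQL text and the user's input travel separately, so the input cannot change the structure of the query.

```ruby
# Hypothetical helper: returns the SQL template and the bind values as two
# separate objects. The search term is never concatenated into the SQL text.
def address_book_search(user_id, name)
  sql = 'SELECT id, name, email FROM contacts ' \
        'WHERE user_id = ? AND name LIKE ?'
  # The wildcard characters are added to the bind value, not to the SQL.
  [sql, [user_id, "%#{name}%"]]
end

sql, binds = address_book_search(42, "Robert'); DROP TABLE contacts;--")

# The hostile input ends up only in the bind values.
puts sql
puts binds.inspect

# With a database driver that supports bind parameters (the sqlite3 gem is
# assumed here purely as an example), the pair would be executed as:
#   db.execute(sql, binds)
```

Because the placeholders are filled in by the database driver, a reviewer only has to confirm that no user input appears in the string assigned to `sql`.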

An abuser story is a user story from the point of view of a malicious adversary. This more recent practice is an adaptation of the abuse cases introduced by Hope, McGraw, and Anton to address the fact that the requirements-gathering process must go beyond considering the desired features (Hope, Paco; McGraw, Gary; Anton, Annie. (2004) Misuse and Abuse Cases: Getting Past the Positive. IEEE Security & Privacy (Volume: 2, Issue: 3). Retrieved 2/25/2019.). Every time a feature is described, someone should spend some time thinking about how that feature might be unintentionally misused or intentionally abused. Abuser stories appear to have been reinvented by multiple authors based on this original idea under various names, including Evil User Stories, Abuse Stories, and Abuser Stories. Johan Peeters appears to have used the term as early as 2008 (Peeters, Johan. Agile Security Requirements Engineering. 2008. Retrieved 2/25/2019.).

An example of an abuser story is the URL Tweaker:

"As an Authenticated Customer, I see what looks like my account number in the URL, so I change it to another number to see what will happen."Rietta, Frank. What Is an Abuser Story (Software). 2015.

This negative user story tests for an Insecure Direct Object Reference vulnerability and rejects the refrain that “no one would ever do this,” which non-technical stakeholders are tempted to offer. It can be scheduled the same as any other work in the process and should have automated tests to exercise it. Those tests could be expressed on the back of the card as:

- Authenticate as a Customer User

- Expect to receive an HTTP Status 200 OK

- Submit GET request with the account number of another account in the URL

- Expect to receive an HTTP Status 401 Unauthorized.
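The steps on the card can be sketched in plain Ruby without a web framework. Everything here is an assumption made for illustration: the `ACCOUNT_OWNERS` data, the `app.current_user` key that an authentication layer would set, and the `/accounts/:id` URL shape. The sketch follows the card in answering 401 for another customer's account; many applications would answer 403 or 404 instead.

```ruby
# Hypothetical data: which customer owns each account number.
ACCOUNT_OWNERS = { '1001' => 'alice', '1002' => 'bob' }.freeze

# A minimal Rack-style app: it takes an env hash and returns
# [status, headers, body]. It serves an account only to its owner.
ACCOUNTS_APP = lambda do |env|
  user = env['app.current_user']
  account_id = env['PATH_INFO'][%r{\A/accounts/(\d+)\z}, 1]
  owner = ACCOUNT_OWNERS[account_id]

  if user && owner == user
    [200, { 'content-type' => 'text/plain' }, ["Account #{account_id}"]]
  else
    # Unauthenticated requests, unknown accounts, and other customers'
    # accounts all receive the same answer.
    [401, { 'content-type' => 'text/plain' }, ['Unauthorized']]
  end
end

# Test helper: issue a GET for an account as the given user and return
# only the HTTP status code.
def get_account(user, account_id)
  status, _headers, _body = ACCOUNTS_APP.call(
    { 'REQUEST_METHOD' => 'GET',
      'PATH_INFO' => "/accounts/#{account_id}",
      'app.current_user' => user }
  )
  status
end

puts get_account('alice', '1001') # the customer's own account: 200
puts get_account('alice', '1002') # the tweaked URL: 401
```

In a real project the same two requests would live in the team's request specs, so the check runs on every change.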

Including security requirements as constraints on user stories and as standalone stories goes a long way toward ensuring that your Agile development team addresses these considerations.

Test-Driven Development for Extensive Automated Tests

The Agile practice with the biggest impact on software application security is test-driven development. Consider what happens in a traditional application that was built without sufficient automated tests, or one whose automated test suite has fallen into a state of disrepair. Without sufficient working automated tests, patching is very hard because all of the functionality of the system must be manually tested for regressions before the updated software can be deployed (this is likely a big reason why low-severity vulnerabilities took 54 days on average to patch).

The best way to patch more quickly is to have a thorough automated test suite that exercises all functionality of the system, tests for any possible regressions in functionality, and runs continually as changes are made so that the developer can see if he or she has made any breaking changes (this last part is covered in the next section on CI/CD). Based on my experience, I agree with Robert Martin when he said that it is irresponsible for a developer to ship a line of code that [he or she] has not executed any unit test for, and one of the best ways to make sure you have not shipped a line of code without testing is to practice TDD (Martin, Robert. Video of TDD Debate with Jim Coplien. 2012. Retrieved 8/14/2018.).

A test-driven development practice also provides a substantial level of regression testing around security controls, so that other developers do not remove those protections in the name of optimization or expediency, unaware of the consequences. For example, it is not uncommon for one developer on a Ruby on Rails team to know that preventing Cross-Site Scripting (XSS) is important and therefore to always use ActionView::Helpers::SanitizeHelper like:

    <h2>Comment by <%= comment.author %></h2>
    <%= sanitize comment.body %>

The call to sanitize loads the HTML into a parser and removes everything except an allowlist of safe HTML tags such as bold, italic, and basic links. That list can be expanded through customization. Later, however, another (perhaps more junior) developer is tasked with enhancing the feature to allow HTML tables in the comments, so he or she changes this code to read:

    <h2>Comment by <%= comment.author %></h2>
    <%= raw comment.body %>

The problem is exacerbated when XSS protection is not treated as a requirement in the user story and its acceptance tests. It was just something that the first developer knew to do out of habit. Without an automated test, the second developer never knows that a serious bug has been introduced with this change, and the software testing team does not check for it as part of their manual tests. However, the first developer can easily write an automated test based on their knowledge of the XSS threat like:

    require 'rails_helper'

    RSpec.describe CommentsController, type: :request do
      it 'does not allow XSS script injection' do
        # %q{} avoids clashing with the quotes inside the payload.
        payload = %q{<script>console.log("I'm an evil hackerman");</script>}

        post comments_path, params: { comment: { body: payload } }

        expect(response).to have_http_status(:created)
        # The raw script tag must not be echoed back in the response body.
        expect(response.body).not_to include('<script>')
      end
    end
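The same regression guard can be sketched outside Rails using only the Ruby standard library. This is an illustration under stated assumptions, not the Rails mechanism: `ERB::Util.html_escape` stands in for output protection (it escapes markup rather than applying an allowlist as `sanitize` does), and the names `SAFE_VIEW`, `RAW_VIEW`, `render_comment`, and `xss_safe?` are hypothetical.

```ruby
require 'erb'

# Stand-in for the original view: both fields are escaped on output.
SAFE_VIEW = ERB.new(<<~HTML)
  <h2>Comment by <%= ERB::Util.html_escape(author) %></h2>
  <p><%= ERB::Util.html_escape(body) %></p>
HTML

# Stand-in for the view after the change that outputs the body unescaped.
RAW_VIEW = ERB.new(<<~HTML)
  <h2>Comment by <%= ERB::Util.html_escape(author) %></h2>
  <p><%= body %></p>
HTML

# Render a view with the given comment fields.
def render_comment(view, author:, body:)
  view.result_with_hash(author: author, body: body)
end

# The regression check: a script tag submitted as input must never appear
# verbatim in the rendered output.
def xss_safe?(view)
  payload = '<script>alert("xss")</script>'
  !render_comment(view, author: 'mallory', body: payload).include?('<script>')
end

puts xss_safe?(SAFE_VIEW) # true: the payload is escaped
puts xss_safe?(RAW_VIEW)  # false: the check catches the unescaped output
```

The check fails as soon as the body is rendered unescaped, which is the signal the second developer would otherwise never receive.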

When a team practices test-driven development, the automated test suite grows over time into substantial test coverage. The power of this test coverage changes the ball game when CI/CD is introduced.

Continuous Integration / Continuous Deployment

Continuous Integration is a process by which, any time the source code changes, an automated process checks it out and runs the full automated test suite. Many Agile teams use this as a step in their code review or source code management flow, such as blocking a Git pull request until it has been reviewed by another developer and all automated tests pass. A smaller set of teams go the extra distance and implement Continuous Deployment, such that when all of the tests pass and the code is merged, an automated process deploys the resulting software to production dozens or even hundreds of times per day. Most enterprises are way behind on this practice. These teams are the opposite of those that struggle to patch a security vulnerability in less than 38 days on average.

CI/CD depends upon all of the Agile practices working together: developers working on appropriately sized pieces of functionality and writing sufficient automated tests that the CI/CD pipeline can test and deploy with confidence. But what happens when there is a critical security vulnerability in the framework or another third-party dependency of an application that was written with TDD and has CI/CD in place? When a patch is released, the CI/CD environment can download it, run the entire test suite, and, if all tests pass, deploy to production without human intervention. This means that this class of security patch can be deployed in minutes, not days, and certainly not months.
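The gate described above can be sketched as a short Ruby script. It is a simplified illustration, not a real CI product: `bin/deploy` is a hypothetical deploy script, and the `runner` parameter is an assumption added so the gate can be exercised without running shell commands. The `bundle update --conservative` and `bundle exec rspec` invocations are standard Bundler and RSpec commands.

```ruby
# The stages for patching one dependency, in the order they must run.
def patch_pipeline(gem_name)
  [
    ['bundle', 'update', '--conservative', gem_name], # pull in the patched gem
    ['bundle', 'exec', 'rspec'],                      # run the full test suite
    ['bin/deploy', 'production']                      # hypothetical deploy step
  ]
end

# Runs each stage in order and stops at the first failure, so the deploy
# stage is reached only when the update and the test suite both succeed.
# By default each stage is executed as a shell command via Kernel#system.
def patch_and_deploy(gem_name, runner: ->(cmd) { system(*cmd) })
  patch_pipeline(gem_name).each do |cmd|
    return false unless runner.call(cmd)
  end
  true
end

# Example with a fake runner that only records what would have been run:
executed = []
patch_and_deploy('rails', runner: ->(cmd) { executed << cmd.join(' '); true })
puts executed
```

Keeping the gate this strict is what makes unattended deployment reasonable: a failing test is the only thing standing between a dependency update and production, so the test suite has to be trusted.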

What about a vulnerability that requires developer intervention? That’s also easier. The developer considers the security defect, writes a failing test that exercises the vulnerability, and then writes a fix. Once the code passes code review, the CI/CD environment runs all automated tests and, when they pass, deploys the update to production without any further human intervention. The vulnerability can now be patched within a couple of days rather than taking over a month to get through development and QA.

This is a game changer. Ask yourself what would have happened in 2017 if Equifax had had this capability in the environment that was compromised because of a not-yet-patched third-party vulnerability in its dependency tree.

Conclusion

As an industry, it is vital that more organizations implement Agile-based development methodologies to decrease the time to patch from 38 days down to hours, if not minutes. This can only happen if CI/CD becomes the norm rather than the exception. Implementing CI/CD effectively requires extremely thorough automated tests, which more organizations should have as part of their current development practices. The best way to achieve this level of automated test coverage is to have development teams practice test-driven development informed by security training for both developers and product owners.